2b. Constrained Adversarial DGMs (C-AdvDGMs): Realistic Attacks with Generative Models

Extending C-DGMs to Safely Test Model Vulnerabilities

In our previous post, we introduced Constrained Deep Generative Models (C-DGMs), which were designed to generate tabular data that not only looks realistic but also respects the rules of the domain. While this was an important step toward producing trustworthy synthetic data, it naturally led to a new question: can these models be used to probe the weaknesses of tabular ML systems?

In this post, we explore exactly that. Extending C-DGMs to generate adversarial examples gives us Constrained Adversarial DGMs (C-AdvDGMs). Because domain constraints are enforced, the generated examples remain valid and realistic: they reflect the kinds of inputs a model might encounter in practice, which makes the attacks practically relevant. The methods behind C-AdvDGMs are presented in our paper, Deep Generative Models as an Adversarial Attack Strategy for Tabular Machine Learning.

Recap. Constrained Deep Generative Models (C-DGMs) integrate a Constraint Layer (CL) that enforces domain rules through a repair strategy. Given the constraints and a feature ordering, the CL minimally adjusts any generated sample that violates the rules, so that it becomes valid while the underlying data distribution is preserved. For example, it guarantees that a relationship such as total_income = salary + bonus + other components always holds.
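To make the repair idea concrete, here is a minimal sketch in plain Python. It is not the actual Constraint Layer: the function name `repair`, the three features, and the two rules (non-negative components, and a total made up of salary and bonus only) are assumptions chosen for illustration. It only shows the general pattern of walking through features in a fixed order and adjusting each one given the already-repaired earlier ones.

```python
def repair(sample):
    """Repair one generated row so it satisfies two illustrative rules.

    Assumed rules (hypothetical, for illustration only):
      1. salary >= 0 and bonus >= 0
      2. total_income == salary + bonus
    Features are handled in a fixed order (salary, bonus, total_income),
    so each later feature is adjusted given the earlier, repaired ones.
    """
    fixed = dict(sample)  # do not mutate the caller's row
    fixed["salary"] = max(fixed["salary"], 0.0)
    fixed["bonus"] = max(fixed["bonus"], 0.0)
    # The last feature in the ordering is fully determined by the others.
    fixed["total_income"] = fixed["salary"] + fixed["bonus"]
    return fixed


raw = {"salary": 3000.0, "bonus": -50.0, "total_income": 3100.0}
print(repair(raw))
```

A row that already satisfies the rules passes through unchanged, which is the sense in which the adjustment is minimal in this toy version.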

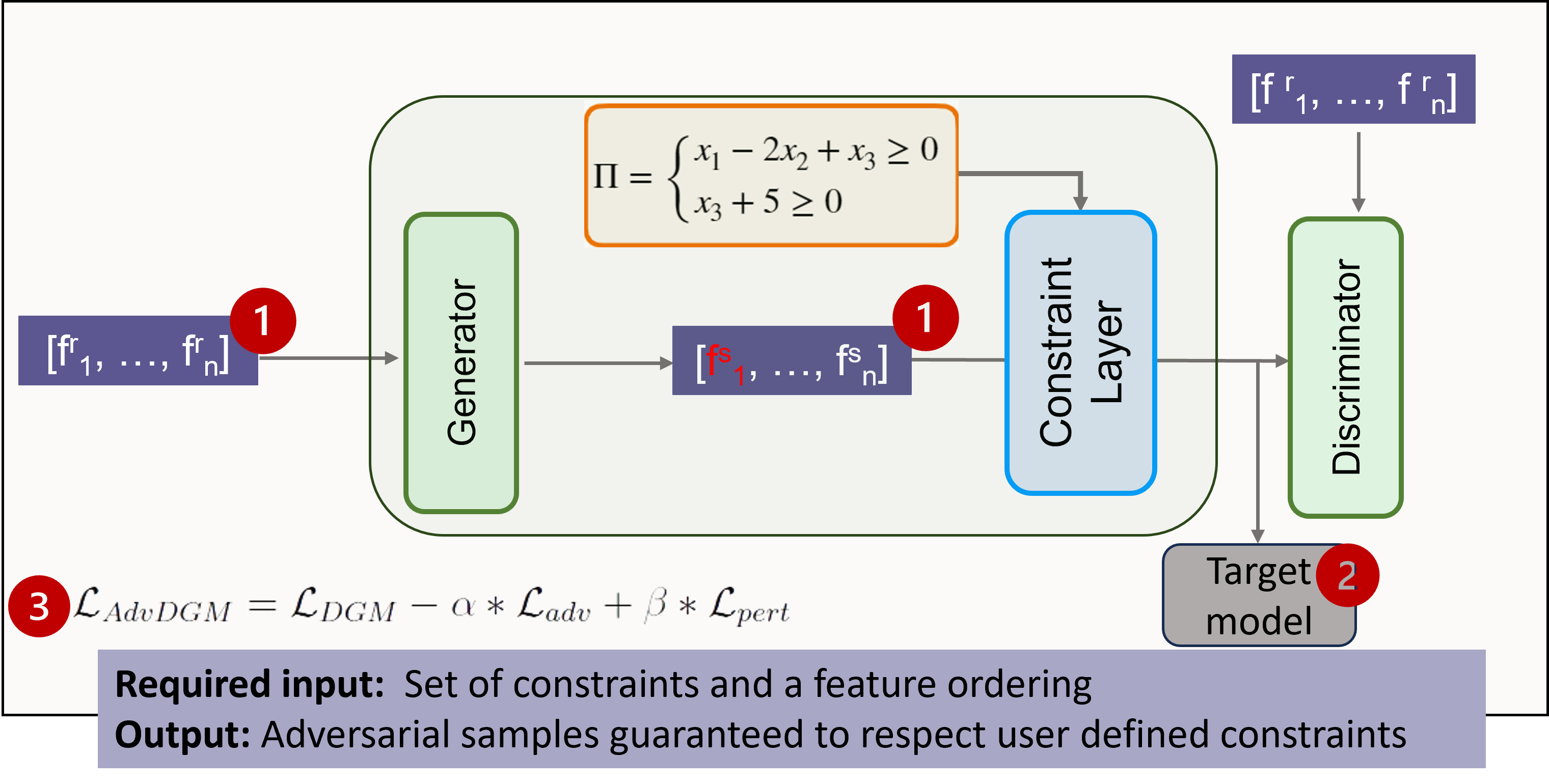

The figure above illustrates the overall framework of C-AdvDGMs. The main modifications from a standard DGM are highlighted by the red numbers:

- Constraint-Aware Generation from Initial Input. Instead of creating data from scratch, the generator takes an existing sample and applies small, targeted perturbations. The constraint layer ensures the output follows all domain rules, keeping samples valid and realistic.

- Target Model Evaluation. In C-AdvDGMs, the generated examples are additionally tested on a target classifier to evaluate how effectively they fool the model, a step that is not present in standard DGMs.

- Optimized Training. The original DGM loss is extended into a combined loss function that balances four objectives: preserving data realism, maximizing adversarial success, controlling perturbation size, and enforcing user-defined constraints.
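The four objectives in the last point can be pictured as a weighted sum. The sketch below is an assumption about the general shape of such a loss, not the formulation from the paper: the function name `combined_loss`, the weights `w_adv`, `w_pert`, `w_cons`, and the specific choice of each term (negative log-probability for the adversarial term, L2 distance for the perturbation term) are all hypothetical.

```python
import math


def combined_loss(realism_loss, p_true_class, x_orig, x_adv, violation,
                  w_adv=1.0, w_pert=1.0, w_cons=1.0):
    """Weighted sum of four illustrative objectives (all names hypothetical).

    realism_loss : the generator's usual loss (e.g. a GAN or VAE term)
    p_true_class : target model's probability for the true class on x_adv
    x_orig/x_adv : original and perturbed feature vectors (lists of floats)
    violation    : non-negative amount by which constraints are violated
    """
    # Adversarial term: small when the target assigns low probability
    # to the true class, i.e. when the example fools the model.
    adv = -math.log(max(1.0 - p_true_class, 1e-12))
    # Perturbation term: L2 distance between original and perturbed sample.
    pert = math.sqrt(sum((a - b) ** 2 for a, b in zip(x_adv, x_orig)))
    return realism_loss + w_adv * adv + w_pert * pert + w_cons * violation


# A perturbed sample that still leaves the target fairly confident.
print(combined_loss(0.5, 0.8, [1.0, 2.0], [1.0, 2.5], violation=0.0))
```

Raising one weight relative to the others shifts the trade-off, for example toward smaller perturbations at the cost of fewer successful attacks.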

To revisit our initial question, we wanted to see if C-AdvDGMs could expose weaknesses in tabular ML systems.

What we discovered: Across the four types of generative models we tested, performance varied quite a bit. Only one model achieved a competitive adversarial success rate (ASR), and even it was often outperformed by gradient-based and search-based attacks, ranking second at best. What really stood out was the role of the Constraint Layer (CL). Including it during training or sampling consistently boosted success rates, which shows how important it is to enforce domain knowledge when generating realistic adversarial examples.
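For readers unfamiliar with the metric, the sketch below shows one way an adversarial success rate can be computed when realism matters. The definition used here is an assumption for illustration: an attempt counts as a success only if the example is misclassified, satisfies the domain constraints, and stays within the perturbation budget. The function name and record keys are hypothetical.

```python
def attack_success_rate(records):
    """Fraction of attack attempts that count as successful.

    Each record is a dict of three booleans (hypothetical keys):
      'misclassified' : the target model's prediction is wrong
      'valid'         : the example satisfies all domain constraints
      'within_budget' : the perturbation is within the allowed size
    """
    if not records:
        return 0.0
    successes = sum(
        1 for r in records
        if r["misclassified"] and r["valid"] and r["within_budget"]
    )
    return successes / len(records)


attempts = [
    {"misclassified": True, "valid": True, "within_budget": True},
    {"misclassified": True, "valid": False, "within_budget": True},
    {"misclassified": False, "valid": True, "within_budget": True},
    {"misclassified": True, "valid": True, "within_budget": True},
]
print(attack_success_rate(attempts))
```

Under this definition, an example that fools the model but breaks a domain rule does not count, which is one way to see why enforcing constraints during generation can raise the measured rate.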

C-AdvDGMs introduce a novel way to generate realistic adversarial examples by extending deep generative models with constraint-aware generation. While they do not always match traditional attacks in terms of raw success rates, they produce adversarial samples that are valid, plausible, and aligned with real-world domain constraints. At the same time, C-AdvDGMs represent just one path toward realistic adversarial attacks. Alternative approaches such as adapting gradient-based, gradient-free, or learning-based attacks to respect feasibility constraints remain promising directions for improving overall attack strength.

By combining constraint-aware generation with advances in other adversarial techniques, researchers can build a more comprehensive toolkit for adversarial testing. This opens the door to more reliable robustness evaluations for tabular ML systems, particularly in high-stakes domains like finance, healthcare, and security.

Note: This blog series is based on research about the realism of current adversarial attacks from my time at the SerVal group, SnT (University of Luxembourg). It’s an easy-to-digest format for anyone interested in the topic, especially those who may not have time (or willingness) to read our full papers.

The work and results presented here are a team effort, including Asst. Prof. Dr. Maxime Cordy, Dr. Thibault Simonetto, Dr. Salah Ghamizi, (to be Dr.) Mohamed Djilani, (to be Dr.) Mihaela C. Stoian and Asst. Prof. Dr. Eleonora Giunchiglia.

If you want to dig deeper into the results or specific subtopics, check out the papers linked in each blog post.
