The Forgotten Domain: Adversarial Machine Learning for Tabular Data

What happens when the most common data format in ML is also the least protected?

Machine learning is now embedded in some of the highest-stakes decision systems: credit approvals, fraud detection, cybersecurity alerts, and even medical diagnoses. And what powers many of these systems behind the scenes? Not images or text but rows and columns. Spreadsheets. Tabular data.

Yet when it comes to AI security, especially in the context of adversarial machine learning, tabular models have been overlooked. While the security of vision and NLP models gets the headlines, the models most often deployed in industry (decision trees, gradient-boosted models, tabular neural networks) receive far less attention when it comes to adversarial threats.

That gap matters. Because if we want trustworthy AI, we can’t ignore the security risks in the systems we actually use.

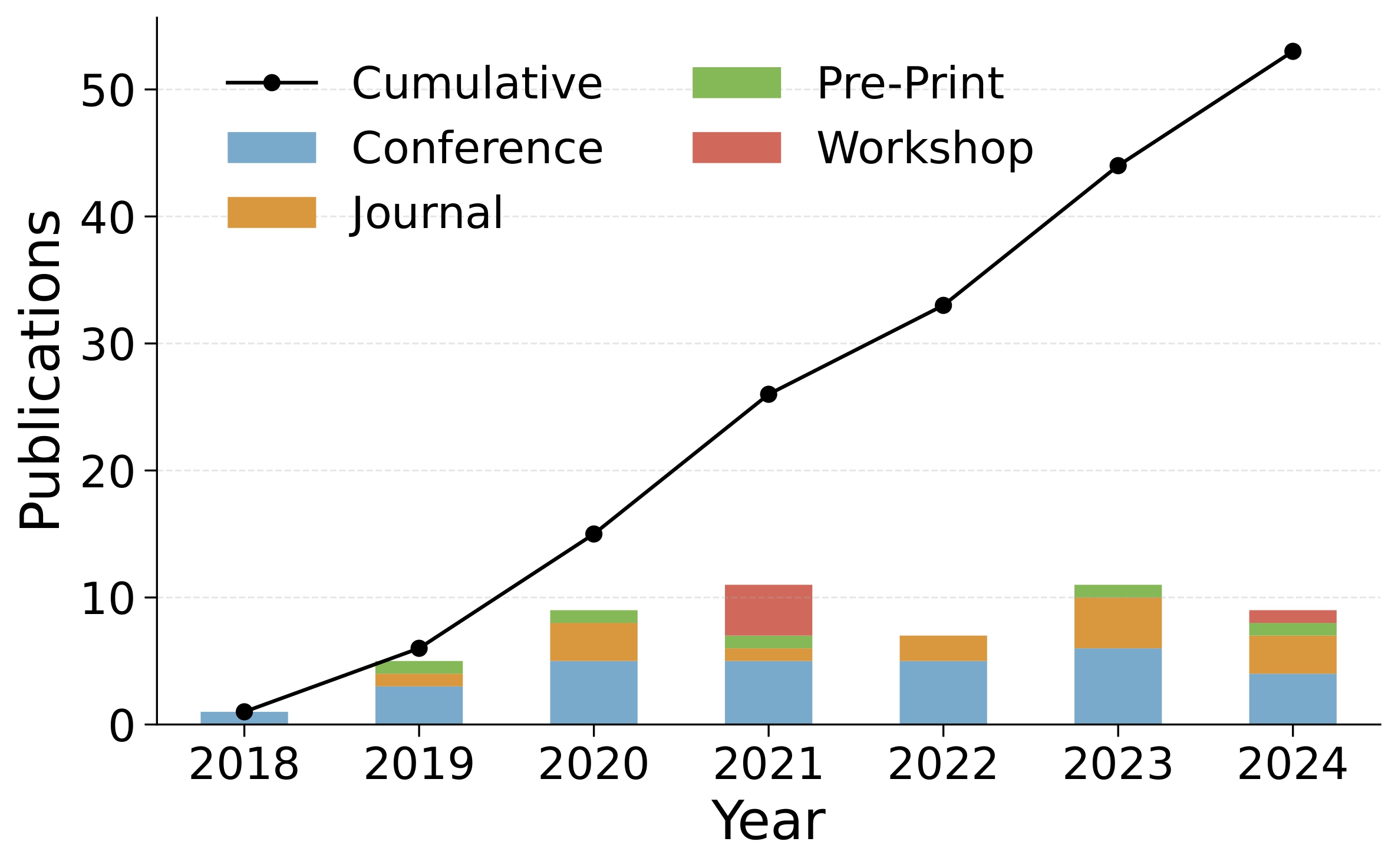

To better understand this overlooked domain, we conducted the first systematic literature review (SLR) focused entirely on adversarial attacks against tabular machine learning. Our paper, “Insights on Adversarial Attacks for Tabular Machine Learning via a Systematic Literature Review”, is now available on arXiv.

We analyzed 53 papers published between 2018 and early 2025 to answer three main questions:

- What does the research landscape look like?

- How are these attacks constructed?

- Do they actually work under real-world constraints?

1. A Niche Field, Fragmented and Domain-Heavy

Interest in adversarial attacks on tabular ML models grew steadily from 2018 until around 2021, but has since stagnated. The field is still small and fragmented: the 53 papers we found are spread across 46 different venues, with little overlap.

About half of the research proposes general-purpose attacks, while the rest focuses on specific domains. Cybersecurity dominates the field, perhaps unsurprisingly, given the naturally adversarial environment and the higher risk of attacks. Finance comes next, with a few papers tackling credit scoring and fraud detection.

But overall, this is still a fragmented frontier with no central community.

2. Attack Design: Many Techniques, Few Standards

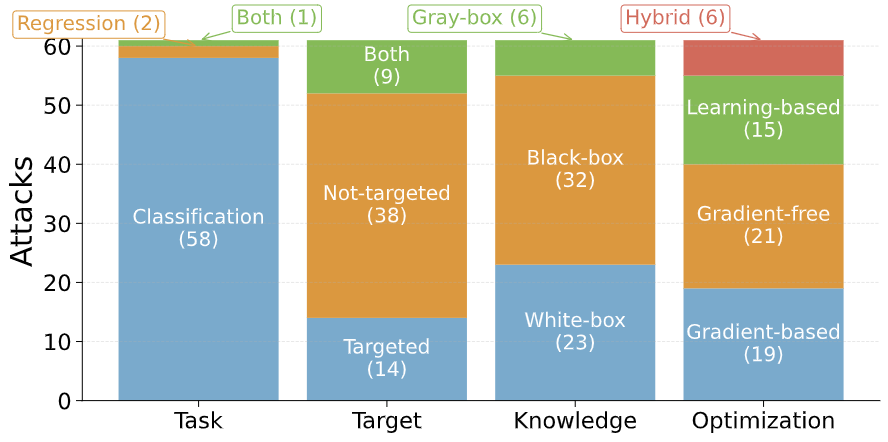

We looked at 61 unique attacks proposed in the reviewed papers and found both clear patterns and notable gaps.

Most attacks target classification problems, especially binary classification, even though many real-world tabular applications (like pricing or forecasting) involve regression or multi-class outputs.

Interestingly, about half the attacks assume black-box access. That’s a good sign: it reflects more realistic threat scenarios. In terms of design, most attacks can be grouped into four high-level strategies:

- Gradient-based: Mostly borrowed from computer vision attacks (e.g., PGD, C&W) but adapted for tabular constraints.

- Gradient-free: Include rule-based methods, evolutionary search, and other heuristics. These are flexible but often inconsistent.

- Learning-based: Often built with GANs, especially in cybersecurity contexts. But many of these reinvent the wheel and rarely compare to prior work.

- Hybrid: Combine strategies, e.g., switching from gradient optimization to search heuristics. Still rare, but promising.
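To make the gradient-based strategy concrete, here is a minimal sketch of a PGD-style attack with two tabular constraints added: a mask of features the attacker may change and per-feature value ranges. It is an illustration only, not code from any reviewed paper. The toy logistic-regression scorer, its weights, and names such as `constrained_pgd`, `mutable`, `lower`, and `upper` are all assumptions made for this example.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def constrained_pgd(x, y, w, b, mutable, lower, upper, eps=0.5, step=0.1, n_iter=20):
    """PGD-style attack on a toy logistic-regression scorer (hypothetical model).

    x: original feature vector; y: true label in {0, 1}
    w, b: model weights and bias
    mutable: boolean mask of features the attacker is allowed to change
    lower, upper: per-feature valid value ranges
    """
    x_adv = x.copy()
    for _ in range(n_iter):
        p = sigmoid(x_adv @ w + b)
        grad = (p - y) * w                               # gradient of the log-loss w.r.t. the input
        x_adv = x_adv + step * np.sign(grad) * mutable   # ascend the loss on mutable features only
        x_adv = np.clip(x_adv, x - eps, x + eps)         # project back into the L-infinity budget
        x_adv = np.clip(x_adv, lower, upper)             # project back into the valid value ranges
    return x_adv

# Toy example: three features scaled to [0, 1]; the third one cannot be changed.
x = np.array([0.6, 0.2, 1.0])
w = np.array([2.0, -3.0, 0.5])
b = 0.0
mutable = np.array([True, True, False])
lower, upper = np.zeros(3), np.ones(3)

x_adv = constrained_pgd(x, y=1, w=w, b=b, mutable=mutable, lower=lower, upper=upper)
print("original score:", sigmoid(x @ w + b))
print("adversarial score:", sigmoid(x_adv @ w + b))
```

The two projection steps are where the tabular adaptation happens. Real attacks typically also need to handle categorical encodings and dependencies between features, which simple clipping does not cover.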

One persistent issue is low reproducibility: only about one-third of the attacks provide public code. This lack of openness makes it difficult to compare methods fairly and slows down progress in the field.

3. Do These Attacks Work in the Real World?

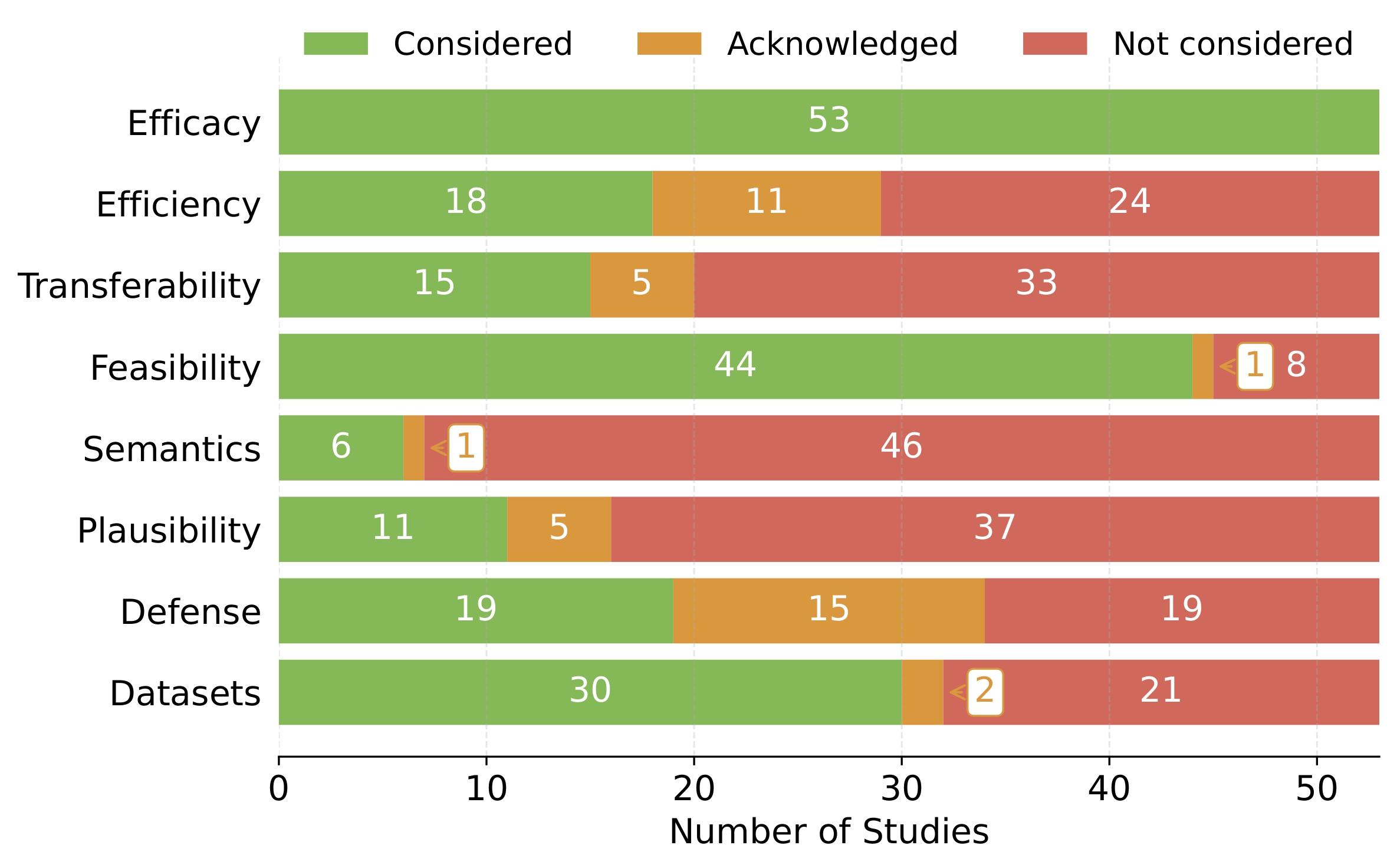

It’s one thing to demonstrate an attack in a benchmark setting. It’s another to successfully execute that attack in real-world environments where inputs are constrained, systems are monitored, and decisions are often reviewed by humans. We evaluated each paper against eight practical criteria measuring not just success, but real-world viability.

- Efficacy: All papers measure this, usually via attack success rate or accuracy drop. But success on clean benchmarks doesn’t guarantee usefulness in live systems.

- Efficiency: Very few papers report runtime or resource costs. Yet many attacks (especially heuristic ones) could be too slow to be practical.

- Transferability: Most attacks target a specific model. Few check if adversarial examples work across architectures, which is key in black-box settings.

- Feasibility: Some attacks impose practical constraints (e.g., value ranges or valid encodings), but many assume idealized settings, ignoring feature accessibility, feature dependencies, or domain-specific rules.

- Semantic Preservation: Does the modified input still make sense? A technically valid change (e.g., flipping “employed” to “unemployed”) may pass unnoticed by a model, but could violate the underlying meaning or ground truth of the example.

- Plausibility: Would a human reviewing the input find it believable? In computer vision this is important, but only a few studies on tabular ML check for it. Some even argue plausibility may not be relevant for tabular attacks, reflecting an ongoing debate in the field.

- Defense Awareness: Attacks are often tested on unprotected models. In reality, defenses like input validation, adversarial training, and monitoring are common.

- Dataset Suitability: Too many studies rely on small, outdated academic datasets (like Iris or Wine) that don’t reflect real-world complexity or risk.

Every paper addressed efficacy, and some considered feasibility, but few went further. Most studies covered just two to six of the eight practical criteria, with four being the most common.
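The gap between efficacy and feasibility can be illustrated with a short sketch. It compares a raw attack success rate with one that only counts adversarial rows passing basic feasibility checks (value ranges, immutable features, valid one-hot encodings). Everything here is hypothetical: the feature layout, the toy `predict` rule, and the helper names `is_feasible` and `success_rates` are assumptions for illustration, not a protocol from the review.

```python
import numpy as np

def is_feasible(x_orig, x_adv, lower, upper, immutable_idx, onehot_groups, tol=1e-9):
    """Return True if x_adv respects value ranges, immutability, and one-hot validity."""
    if np.any(x_adv < lower - tol) or np.any(x_adv > upper + tol):
        return False
    if len(immutable_idx) > 0 and not np.allclose(x_adv[immutable_idx], x_orig[immutable_idx], atol=tol):
        return False
    for group in onehot_groups:
        values = x_adv[group]
        is_binary = np.all(np.isclose(values, 0.0) | np.isclose(values, 1.0))
        if not is_binary or not np.isclose(values.sum(), 1.0):
            return False  # e.g. two categories active at the same time
    return True

def success_rates(X_orig, X_adv, y_true, predict, **spec):
    """Raw and feasibility-filtered success rates over correctly classified rows."""
    correct = predict(X_orig) == y_true
    fooled = correct & (predict(X_adv) != y_true)
    feasible = np.array([is_feasible(xo, xa, **spec) for xo, xa in zip(X_orig, X_adv)])
    n = max(int(correct.sum()), 1)
    return fooled.sum() / n, (fooled & feasible).sum() / n

# Hypothetical columns: [age, income, is_employed, is_unemployed]
X_orig = np.array([[30, 20000, 1, 0], [45, 25000, 0, 1], [52, 10000, 1, 0]], dtype=float)
X_adv = np.array([[30, 35000, 1, 0],    # flips the decision and is valid
                  [45, 40000, 1, 1],    # flips the decision but breaks the one-hot group
                  [52, 12000, 1, 0]],   # valid but does not flip the decision
                 dtype=float)
y_true = np.array([0, 0, 0])
predict = lambda X: (X[:, 1] >= 30000).astype(int)  # toy stand-in for a real model

raw, filtered = success_rates(
    X_orig, X_adv, y_true, predict,
    lower=np.array([18, 0, 0, 0]), upper=np.array([100, 1e6, 1, 1]),
    immutable_idx=[0], onehot_groups=[[2, 3]],
)
print(f"raw success rate: {raw:.2f}, feasible success rate: {filtered:.2f}")
```

In this toy setup the raw rate counts two of three rows as successes, while the filtered rate keeps only the one that a basic input validator would accept. Semantic preservation and plausibility are harder to check mechanically and are not covered by this sketch.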

What We Learned

Adversarial attacks on tabular models aren’t just academic exercises. In fields like finance, healthcare, and cybersecurity, these vulnerabilities can have real-world consequences. And yet, the field remains immature.

We found innovative techniques tested only in idealized settings, with limited practical relevance. Many papers measure success as a drop in model accuracy, but rarely ask deeper questions: Is the attack realistic? Is the input plausible? Would it even be accepted by the system?

We also found a lack of shared standards. Key concepts like semantic preservation and plausibility are used inconsistently and often left undefined. Code is frequently missing. Benchmarks are almost non-existent. And most attacks are tested on just one dataset and undefended models, making comparisons and cumulative progress difficult.

Where the Field Goes Next

Despite these issues, the space for meaningful research in this domain remains open, especially as paradigms like pretraining, multitask learning, and tabular foundation models continue to emerge. What’s needed now isn’t just more attacks, but a stronger research foundation:

- Clear, shared definitions of robustness in tabular ML

- Benchmarks that reflect real-world constraints

- Broader, more realistic datasets

- Reproducible code and cross-domain evaluations

If we want machine learning to be secure and trustworthy in critical systems, from credit scoring to malware detection, we need to stop treating tabular data as an afterthought.

It’s time to give it the attention, clarity, and care it deserves.
