0. AI/ML Security Is Broken Without Realistic Adversarial Testing

You can’t secure a castle by testing it with cardboard swords.

Imagine testing the strength of a castle’s defensive walls with cardboard weapons. It sounds absurd, but this is often how adversarial testing in AI/ML is carried out today.

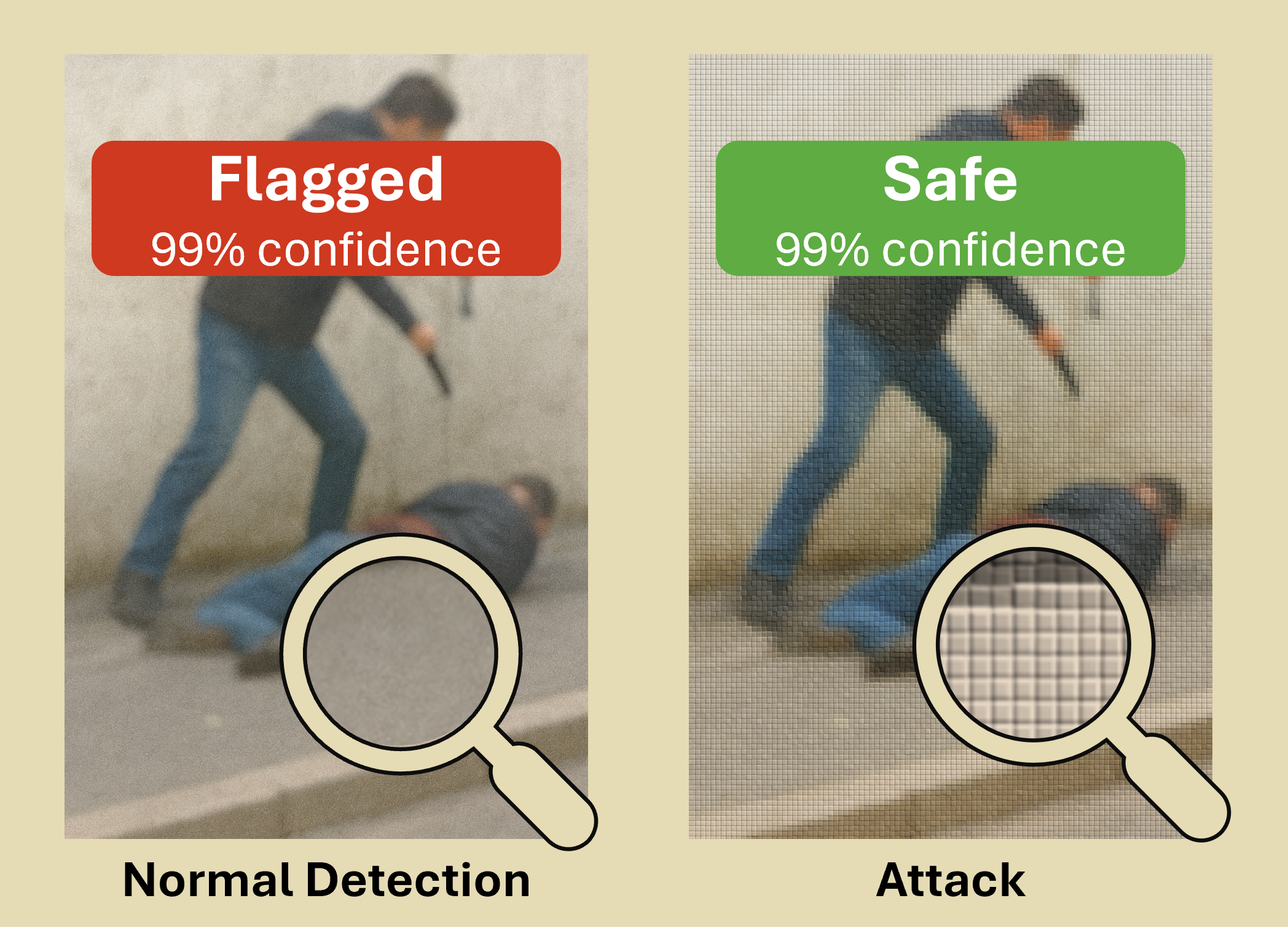

Now, instead of a castle, imagine social media platforms. They rely on AI/ML to filter harmful content such as violence, abuse, or nudity before it reaches your screen. But what happens if someone slightly alters a harmful image so that the AI/ML model no longer detects it, even though a human would still clearly see the problem? That is the growing challenge of adversarial attacks.

An adversarial attack relies on a deliberately crafted input (an adversarial example) that deceives a machine learning model without fooling a human. To us, the image looks unchanged; to the model, it is something entirely different. This happens because machine learning models don’t “see” the way we do. Instead of high-level conceptual features (like a face or the texture of an object), they often rely on fragile mathematical patterns (such as pixel correlations), which can break when exposed to the right perturbations.

The key word here is “right”. Not just any manipulation will make a model fail. Deployed models are not perfect, but they are robust enough that a successful adversarial example usually requires a carefully crafted input, often found through a time-consuming search. In most cases, it is not something that can be done casually or instantly.
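To make the idea of the “right” perturbation concrete, here is a minimal sketch on a toy linear classifier with hypothetical random weights standing in for a content filter (it is not a real model, and not the method used in the research behind this series). It compares a gradient-sign step, in the spirit of the fast gradient sign method (FGSM), against random noise with exactly the same per-feature budget `eps`. All names (`predict`, `crafted_perturbation`, `random_perturbation`) are illustrative assumptions.

```python
import random

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def add(x, delta):
    # Note: no clipping to the valid [0, 1] pixel range, for brevity.
    return [xi + di for xi, di in zip(x, delta)]

def predict(w, b, x):
    """Toy linear classifier: a score above 0 means 'harmful'."""
    return "harmful" if dot(w, x) + b > 0 else "benign"

def crafted_perturbation(w, eps):
    """Gradient-sign step in the spirit of FGSM, for a linear model.

    The gradient of the score with respect to x is simply w, so moving each
    feature by eps against the sign of its weight lowers the score as much
    as possible while no feature changes by more than eps.
    """
    return [-eps if wi > 0 else eps for wi in w]

def random_perturbation(n, eps, rng):
    """Random +/-eps noise with exactly the same per-feature budget."""
    return [rng.choice((-eps, eps)) for _ in range(n)]

rng = random.Random(0)
n, eps = 1000, 0.01                          # 1000 "pixels", tiny budget
w = [rng.gauss(0.0, 1.0) for _ in range(n)]  # hypothetical model weights
x = [rng.random() for _ in range(n)]         # hypothetical input image
b = 2.0 - dot(w, x)                          # place x at score 2.0: "harmful"

print("original:", predict(w, b, x))
print("crafted: ", predict(w, b, add(x, crafted_perturbation(w, eps))))
random_flips = sum(
    predict(w, b, add(x, random_perturbation(n, eps, rng))) == "benign"
    for _ in range(100)
)
print("random noise flipped the label in", random_flips, "of 100 trials")
```

On this toy model the crafted step flips the label, while random noise of the same size essentially never does: the crafted step shifts the score by `eps` times the sum of the absolute weights (roughly 8 here), whereas random signs mostly cancel out (a typical shift on the order of 0.3). For deep networks the gradient is not constant, so attackers usually iterate many such steps, which is where the time-consuming search comes in.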

The first clear demonstrations of adversarial attacks came in 2013 and 2014 [1,2], when researchers showed that small, carefully crafted changes to inputs could cause machine learning models, including deep neural networks, to misclassify them; for images, these changes were nearly imperceptible. Since then, adversarial attacks have expanded to text, malware, financial transactions, and many other types of data. In practice, these attacks can have serious consequences: they can let malware bypass antivirus engines, help financial fraudsters evade detection, and make self-driving cars misread traffic signs. A clear and present digital security threat!

The rise of these attacks (and of other attacks on AI/ML systems) has not gone unnoticed. Entire companies now exist solely to secure AI/ML systems. Take HiddenLayer [3], for example: its founders were motivated to build defenses by firsthand experience with such attacks while working at an antivirus provider. Like many others in the field, its strategy begins with adversarial testing, known in cybersecurity circles as “red-teaming.” This approach involves attacking your own models before anyone else can, uncovering weaknesses early and assessing the risks these systems might face.

But here’s the catch:

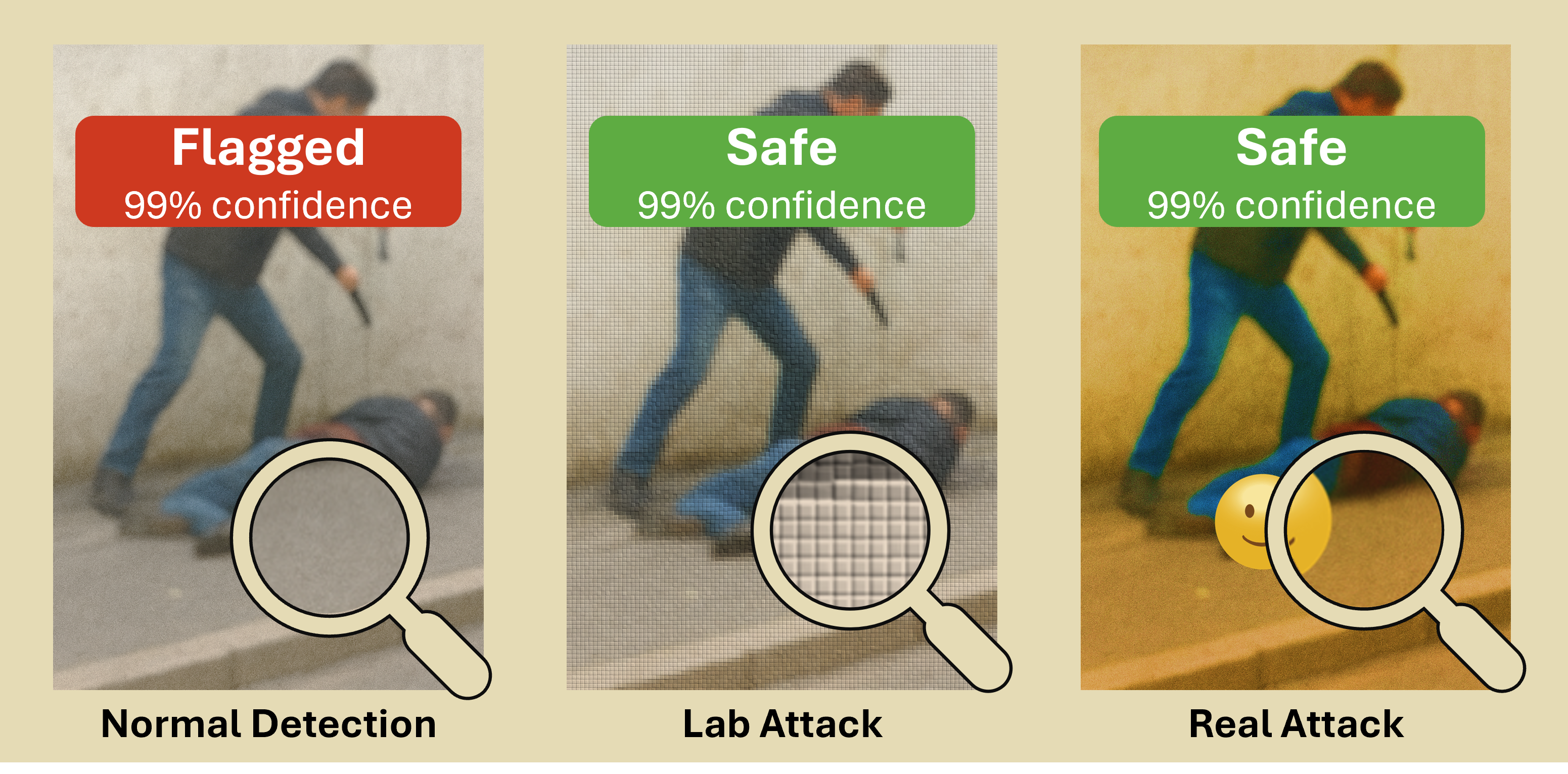

Most adversarial testing today is disconnected from the real world.

Researchers and engineers spend hours at their computers crafting mathematically precise tweaks: small pixel changes in an image or character swaps in text. Easy to create and measure, yes, but far from the messy and creative tactics real attackers use.

Real-world adversaries operate very differently from attacks in controlled lab experiments. Their priorities are:

- Results, not perfection. A tiny, elegant pixel tweak or an awkward emoji slapped over an image: the choice does not matter. They care about whatever fools both the AI and any humans monitoring it.

- Function above all. Every attack must still work in practice: transactions must process, malware must execute, sentences must make sense.

- The full system. Attackers do not stop at the model. They probe user behavior, business logic, and human oversight, exploiting whichever link breaks first.
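The “function above all” requirement can be illustrated with a minimal sketch for a hypothetical payment record. The feature names (`amount`, `n_items`, `hour`, `unit_price`), the constraints in `is_valid`, and the `repair` strategy are all assumptions chosen for illustration, not the constraint language or attack used in the research behind this series. The sketch shows how a lab-style perturbation, applied to each feature independently, can produce a transaction that could never be submitted.

```python
def is_valid(tx):
    """Hypothetical domain constraints a real payment must satisfy."""
    return (
        tx["amount"] > 0
        and float(tx["n_items"]).is_integer() and tx["n_items"] >= 1
        and float(tx["hour"]).is_integer() and 0 <= tx["hour"] <= 23
        and abs(tx["amount"] - tx["n_items"] * tx["unit_price"]) < 1e-6
    )

def lab_perturbation(tx, delta):
    """Shift features independently, as unconstrained attacks typically do."""
    return {k: v + delta.get(k, 0.0) for k, v in tx.items()}

def repair(tx):
    """Project a perturbed transaction back onto the (hypothetical) valid domain.

    Counts and hours are rounded and clipped, and the derived amount is
    recomputed so that the transaction would actually process.
    """
    n_items = max(1, round(tx["n_items"]))
    hour = min(23, max(0, round(tx["hour"])))
    unit_price = max(0.01, tx["unit_price"])
    return {"amount": n_items * unit_price, "n_items": n_items,
            "hour": hour, "unit_price": unit_price}

tx = {"amount": 60.0, "n_items": 3, "hour": 14, "unit_price": 20.0}
perturbed = lab_perturbation(tx, {"amount": -75.0, "n_items": 0.4, "hour": 10.5})
print(is_valid(tx), is_valid(perturbed), is_valid(repair(perturbed)))  # True False True
```

Here the unconstrained perturbation yields a negative amount, a fractional item count, and an hour of 24.5. Repairing it restores validity but also undoes part of the change (the amount is back to 60.0), so a perturbation that fools a model in an unconstrained test may not survive once domain constraints are enforced. A realistic attack has to search within the valid region from the start.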

When adversarial testing ignores real-world attacks, it is like testing a castle’s walls with cardboard weapons. The walls may seem strong, but real threats go unchecked. The result is a false sense of security: AI systems that survive controlled tests may still fail against actual adversaries. That is why every defense must start with realism. Only by reflecting how attackers truly behave can we prepare models for the threats they will face in practice.

Realistic testing recreates the conditions that attackers actually operate under. It is not about making the process harder for researchers and AI/ML security practitioners; it is about preparing for genuine risks rather than idealized scenarios. Security tests that ignore real adversary behavior are not just incomplete; they can be misleading.

This blog series is dedicated to closing the realism gap in adversarial testing and defense. Just as a castle cannot rely on cardboard weapons to prove its strength, AI systems cannot rely on idealized tests to ensure security. In the coming posts, we will explore how realism plays out across different domains, from financial fraud to malware detection, and why it is important not only for finding vulnerabilities but also for building resilient defenses that can withstand real-world adversaries.

1. Biggio et al., Evasion Attacks against Machine Learning at Test Time, ECML 2013

2. Szegedy et al., Intriguing properties of neural networks, ICLR 2014

Note: This blog series is based on research on the realism of current adversarial attacks, carried out during my time in the SerVal group at SnT (University of Luxembourg). It presents that research in an easy-to-digest format for anyone interested in the topic, especially those who may not have the time (or the inclination) to read our full papers.

The work and results presented here are a team effort involving Asst. Prof. Dr. Maxime Cordy, Dr. Thibault Simonetto, Dr. Salah Ghamizi, (to be Dr.) Mohamed Djilani, (to be Dr.) Mihaela C. Stoian and Asst. Prof. Dr. Eleonora Giunchiglia.

If you want to dig deeper into the results or specific subtopics, check out the papers linked in each blog post.
